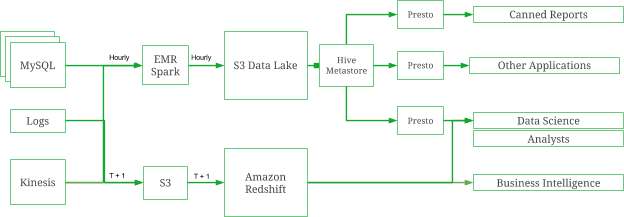

I think the right move here is to experiment with this in a local project, and see if we can develop something robust enough to add into dbt-redshift as a built-in materialization. I'd happily close #19 in favor of this issue if we can get some more traction this time around. Pretty darn cool! We opened a good first issue for that idea a long time ago, but we didn't get any immediate takers. a ref to that model downstream resolves to its Spectrum location, and the downstream model reads from its data in S3.you write to S3 directly from model SQL.This version feels much more akin to a model (logic-bearing, materialized object) than a source (pointer): as as a pretty special and different thing, which just happens to also be called external table. So: I think it's fair to treat create external table. (There's also a bit more of this viewpoint reflected in dbt-labs/dbt-core#3391.) I agree that the lines can be blurry, and there's points in favor of either side. That issue is a bit lengthy, but it includes the rationale for the decision to treat external tables as sources (metadata pointers only) instead of models (logic-bearing, env-specific, materialized objects). A key outlier here is Apache Spark, where all tables are external tables some are managed, others have their location specified explicitly (which dbt-spark accomplishes via a location config).Ī lot of my thinking about how the dbt-external-tables package should work is based on this "read-only" view, reflected in dbt-labs/dbt-external-tables#1. The other major data warehouses conceive of external tables only as sources of data in external storage-there's always the option of offloading some data back to external storage via copy or unload, but the two aren't tightly linked. I believe Redshift/Spectrum is unique in its support of create external table. Thanks for opening my view, there's a crucial distinction here between "read-only" and "write-read" external tables-sources and sinks, if you will.

Are you interested in contributing this feature? AWS Glue / Athena) to query the results using existing analytical patterns. They can use dbt and the warehouse as an ephemeral compute / transform layer, and then persist the data to a file store, which enables other tools (e.g. Additional contextīelieve this is relevant for any of the databases currently supported in the external tables package:ĭbt Users who have existing infrastructure that leverages a more data lake centric approach for managing persistence will benefit from this. The goal here is to make that logic a materialization so that it can become part of the dbt run pipeline.

The implementation of create_external_table here accomplishes this when triggered by a run-operation.

0 kommentar(er)

0 kommentar(er)